Projects

The following is a list of a few notable projects. For more, view my full GitHub portfolio here.

CollinGPT Series

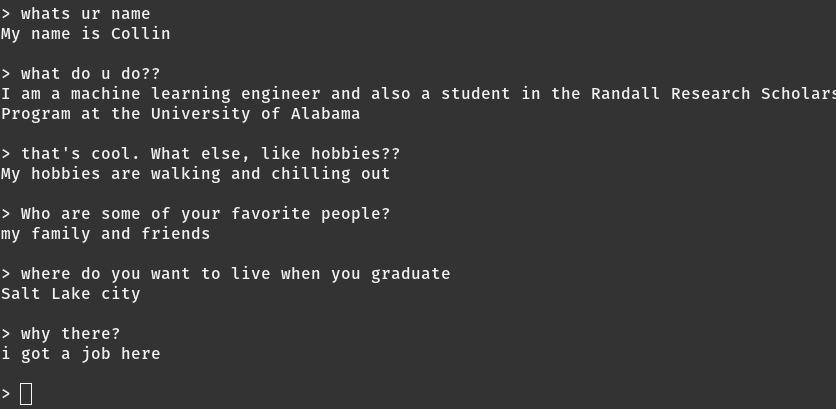

It has become a biennial tradition of mine to fine-tune a local language model on a dataset I made from my text messages, essays, and journal entries. For me, it has come to serve not just as a fun program to show my friends, but also as a metric for progress in LLMs and the tooling surrounding them.

My most recent attempt, CollinGPT-2, showed a lot of interesting progress. For one, I started from the Chinese model Qwen3, a big change from the Llama 2 fine-tune I originally used as a base, and a sign of the rise of Chinese companies in the AI space. I was also impressed by how easy it was to set up the training scripts. I had originally trained my model through the Oobabooga web UI, but this time I wanted a full script for greater control and understanding. With the transformers, peft, and trl libraries, my training code came to only about 200 lines: loading the model and dataset and attaching a LoRA adapter for fine-tuning were all straightforward. Training was also significantly faster, taking only around 15 to 20 minutes, thanks to the smaller but similarly performant model (Qwen3 8B vs. Llama 2 13B) and the modern H200 GPU I rented. Finally, llama.cpp (the GOAT of local LLMs) came in handy at the end to quantize the model and run it on my laptop.
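LoRA is what makes fine-tuning an 8B model this cheap: instead of updating a layer's full weight matrix W, it freezes W and trains two small matrices B and A whose product, scaled by `alpha / r`, is added to the layer's output. The toy, dependency-free sketch below illustrates that idea on a single linear layer. It is not peft's implementation (peft's initialization and layer wiring differ in details), and the names `LoRALinear`, `matvec`, and `lora_param_count` are hypothetical.

```python
import random

# Hypothetical toy illustration of LoRA on one linear layer; not peft's code.


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight       # pretrained weights, never updated
        self.scale = alpha / rank  # LoRA scaling factor
        # A (r x d_in) starts small and random; B (d_out x r) starts at zero,
        # so the adapted layer initially behaves exactly like the base layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained; W stays frozen.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix."""
    return rank * (d_in + d_out)
```

For a hypothetical 4096 × 4096 projection at rank 16, `lora_param_count(4096, 4096, 16)` gives 131,072 trainable parameters against 16,777,216 in the full matrix, under 1% of the original.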

As with the first edition of CollinGPT, I ended up somewhat disappointed. While the model retained much of my writing style and remembered some bits of information about me, it constantly hallucinated facts about my life, even though those facts were present both in the training data and in the system prompt. It was fun to play around with and show to my friends, but it won't be writing my emails anytime soon. The world will have to wait until 2027 for CollinGPT-3; hopefully the next edition will fix some of these woes.

SQLLM

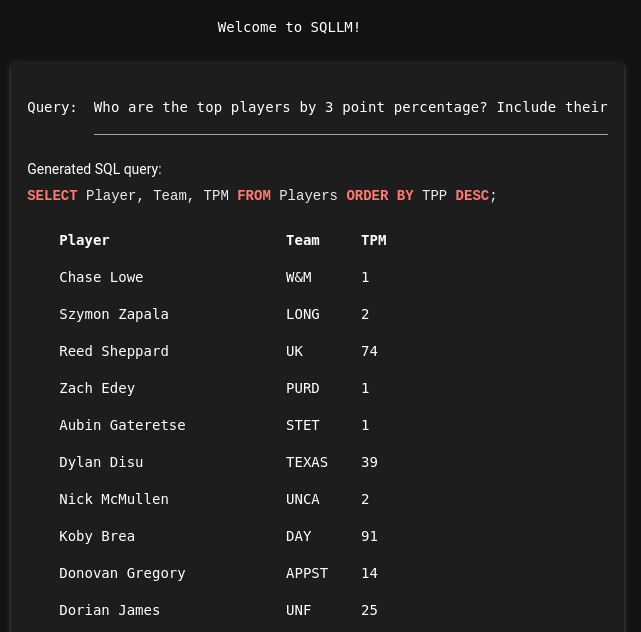

SQLLM is the project our team submitted to the Georgia Tech Hacklytics competition in February. I had a great time working with my friend to try to finish it. We faced numerous setbacks in our short 36-hour window: our other two groupmates were exhausted by the drive and by unhealthy hackathon sleeping habits, and we had great difficulty deciding on a project idea and tech stack.

Ultimately, we chose a track that asked for software that would let users interact more easily with medical databases using AI. With SQLLM, we built a more general database interaction tool. Once connected to an existing database, SQLLM asks the user for a plain-English query, then uses CodeLlama-34B to translate it into a read-only query against the database. We also added a developer mode that lets advanced users edit the generated query before submission and run queries that modify the database. The result was quite user-friendly and impressive given the time limit.
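How SQLLM enforced read-only access and developer mode isn't specified, so what follows is only a sketch under assumptions: the database is SQLite, the LLM call is a stand-in function `translate` (in SQLLM this would prompt CodeLlama-34B), and read-only access is enforced by opening the database with SQLite's `mode=ro` URI parameter rather than by inspecting the generated SQL. The function name `run_query` is hypothetical.

```python
import pathlib
import sqlite3
from typing import Callable, Optional

# Hypothetical sketch: SQLite is assumed, and `translate` stands in for the LLM call.


def run_query(
    db_path: str,
    question: str,
    translate: Callable[[str], str],
    developer_mode: bool = False,
    edited_sql: Optional[str] = None,
) -> list:
    """Translate a plain-English question to SQL and execute it."""
    sql = translate(question)
    if developer_mode:
        # Developer mode: the user may override the generated SQL, and writes are allowed.
        if edited_sql is not None:
            sql = edited_sql
        conn = sqlite3.connect(db_path)
    else:
        # Normal mode: open the file read-only, so any write statement raises an error.
        uri = pathlib.Path(db_path).resolve().as_uri() + "?mode=ro"
        conn = sqlite3.connect(uri, uri=True)
    try:
        with conn:  # commits on success, rolls back on error
            return conn.execute(sql).fetchall()
    finally:
        conn.close()
```

Enforcing read-only access at the connection level means a mistranslated `DELETE` or `UPDATE` fails with an error instead of depending on the model to follow instructions.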